Unmasking Biases in Machine Learning: Understanding the Challenges and Seeking Solutions

In the rapidly evolving landscape of artificial intelligence (AI) and machine learning (ML), the deployment of algorithms to make decisions in various domains has become increasingly prevalent. However, a critical concern that has emerged is the presence of biases in these systems. Biases in machine learning refer to the systematic errors or prejudices that may be embedded in algorithms, leading to unfair or discriminatory outcomes. This article explores the complexities surrounding biases in machine learning, the potential consequences, and the ongoing efforts to mitigate these challenges.

I. The Nature of Biases in Machine Learning

A. Implicit Biases in Data

One of the primary sources of bias in machine learning is the data used to train these algorithms. If the training data is biased, the model is likely to learn and perpetuate those biases. Historical data, which often reflects societal biases, can introduce systemic inequalities into the algorithms. For example, if a hiring algorithm is trained on historical data that favors certain demographics, it may inadvertently perpetuate discrimination in future hiring decisions.
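One way to see whether historical data carries this kind of skew is to compare outcome rates across groups before any model is trained. The sketch below is illustrative only: the records, the group names `A` and `B`, and the helper `selection_rates` are hypothetical, and the ratio of the lowest to the highest rate is just one of several possible summary measures.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the fraction of positive outcomes per group.

    `records` is an iterable of (group, hired) pairs, where hired is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, hired in records:
        totals[group] += 1
        positives[group] += int(hired)
    return {g: positives[g] / totals[g] for g in totals}

# Hypothetical historical hiring records: (group, hired)
history = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = selection_rates(history)
# Ratio of the lowest selection rate to the highest; 1.0 means equal rates.
ratio = min(rates.values()) / max(rates.values())
print(rates, ratio)
```

A ratio well below 1.0 in the training data suggests that a model fit to it could reproduce the same gap, although it does not by itself establish why the gap exists.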

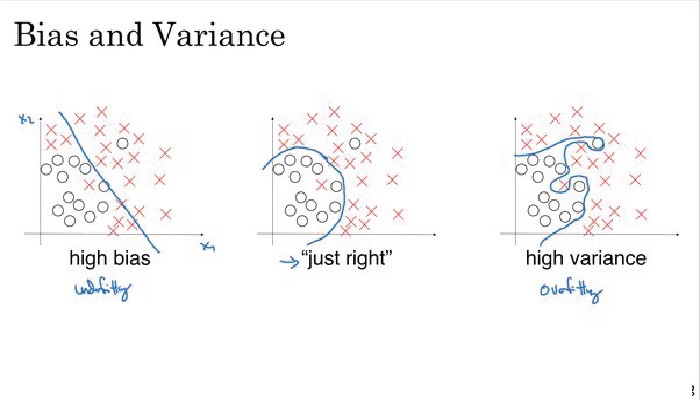

B. Algorithmic Biases

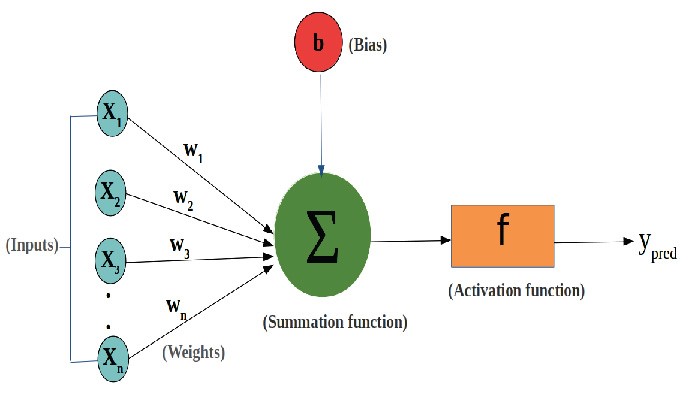

Beyond data biases, machine learning models can develop algorithmic biases during the training process. These biases can arise from the model’s design, its learning mechanisms, or the choice of optimization techniques. Identifying and mitigating them requires close knowledge of the underlying algorithms and of how their parameters affect outcomes.
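A small example can show how an optimization objective alone may produce uneven results. In the sketch below, which uses made-up data and a deliberately simple one-threshold classifier, "training" means choosing the threshold with the lowest overall error. Because the hypothetical majority group contributes ten times as many points, the chosen threshold fits that group and leaves more errors in the minority group.

```python
def error_rate(points, threshold):
    """Fraction of (x, label) points misclassified by the rule x >= threshold."""
    wrong = sum((x >= threshold) != label for x, label in points)
    return wrong / len(points)

# Hypothetical data: the true decision boundary differs between groups.
majority = [(x, x >= 5) for x in range(10)] * 10   # 100 points, boundary at 5
minority = [(x, x >= 3) for x in range(10)]        # 10 points, boundary at 3

# "Training": pick the threshold that minimizes error over all points pooled.
best = min(range(11), key=lambda t: error_rate(majority + minority, t))

majority_error = error_rate(majority, best)
minority_error = error_rate(minority, best)
print(best, majority_error, minority_error)
```

Nothing in the data here is mislabeled; the gap comes from minimizing a pooled average, which is one illustration of how design and optimization choices can matter.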

II. Real-world Consequences of Biases in Machine Learning

A. Unintended Discrimination

Biased machine learning models can lead to unintended discrimination against certain groups. For instance, facial recognition systems trained on predominantly lighter-skinned faces may struggle to accurately identify individuals with darker skin tones, resulting in discriminatory outcomes. This can have profound consequences in areas such as law enforcement, finance, and healthcare, where biased decisions can disproportionately affect marginalized communities.
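Disparities like this tend to be hidden by a single overall accuracy figure, so evaluation results are often broken down by group. The following is a minimal sketch with invented numbers; the group labels and the helper `accuracy_by_group` are assumptions made for illustration, not results from any real system.

```python
from collections import defaultdict

def accuracy_by_group(examples):
    """Return accuracy per group from (group, true_label, predicted_label) triples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in examples:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical evaluation results for a face-matching model.
results = (
    [("lighter", 1, 1)] * 95 + [("lighter", 1, 0)] * 5
    + [("darker", 1, 1)] * 15 + [("darker", 1, 0)] * 5
)

per_group = accuracy_by_group(results)
overall = sum(t == p for _, t, p in results) / len(results)
print(round(overall, 3), per_group)
```

In this made-up case the overall accuracy looks high because the better-served group dominates the test set, while the per-group view exposes the gap.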

B. Reinforcement of Stereotypes

Machine learning models may inadvertently reinforce existing societal stereotypes present in the training data. For example, an AI-powered resume screening tool trained on historical data may favor male candidates over female candidates, perpetuating gender biases in the hiring process. Recognizing and addressing these biases is crucial to building fair and equitable AI systems.

III. Challenges in Detecting and Mitigating Biases

A. Lack of Transparency

One of the significant challenges in addressing biases in machine learning is the lack of transparency in many algorithms. Black-box models, which do not reveal their decision-making processes, make it challenging to identify and rectify biases. Efforts to enhance transparency in AI models are crucial for building trust and understanding the mechanisms driving algorithmic decisions.

B. Evolving Nature of Biases

Biases in machine learning are dynamic and can evolve over time. As societal norms and values change, algorithms may need continuous monitoring and adaptation to prevent the perpetuation of outdated or harmful biases. This requires a proactive approach to bias detection and mitigation throughout the lifecycle of AI systems.

IV. Ethical Considerations and Responsible AI Development

A. Ethical Frameworks in Machine Learning

Developing and deploying machine learning models necessitates a strong ethical foundation. Adopting ethical frameworks in AI development ensures that considerations of fairness, transparency, and accountability are embedded into the design and deployment of algorithms. Ethical AI practices are essential for building responsible and trustworthy systems.

B. Inclusive Data Collection and Representation

To address biases at their root, it is crucial to prioritize inclusive data collection and representation. Diverse datasets that accurately reflect the population being served can help reduce biases in machine learning models. Proactive efforts to collect representative data contribute to more equitable AI systems.
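A simple representation check compares each group's share of the dataset with its share of the population being served. The sketch below assumes the population shares are known from some external source; the numbers, the group names, and the 5-percentage-point tolerance are all hypothetical.

```python
from collections import Counter

def representation_gaps(sample_groups, population_shares):
    """Return dataset share minus population share for each group."""
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {g: counts.get(g, 0) / n - share for g, share in population_shares.items()}

# Hypothetical reference shares and a hypothetical collected dataset.
population_shares = {"A": 0.5, "B": 0.3, "C": 0.2}
sample = ["A"] * 70 + ["B"] * 27 + ["C"] * 3

gaps = representation_gaps(sample, population_shares)
# Flag groups that fall more than 5 percentage points short (assumed tolerance).
underrepresented = [g for g, gap in gaps.items() if gap < -0.05]
print(gaps, underrepresented)
```

Matching population shares is only one notion of representativeness; small groups may still need more examples than their share implies for a model to serve them well.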

V. Ongoing Efforts to Combat Biases in Machine Learning

A. Bias Detection Tools

Researchers and practitioners are actively developing tools to detect biases in machine learning models. These tools aim to provide insights into the decision-making processes of algorithms and identify potential sources of bias. Continuous monitoring and evaluation using such tools are essential for ensuring fair and unbiased AI applications.
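Many such tools report group fairness metrics computed from a model's predictions. As an illustration of the kind of check involved, and not the interface of any particular tool, the sketch below computes the gap in true positive rate between groups, sometimes called an equal opportunity difference. The data and function names are hypothetical.

```python
def true_positive_rate(examples):
    """TPR over (true_label, predicted_label) pairs; labels are 0 or 1."""
    positives = [(t, p) for t, p in examples if t == 1]
    return sum(p for _, p in positives) / len(positives)

def equal_opportunity_gap(by_group):
    """Return the largest TPR difference between groups, plus the per-group rates."""
    rates = {g: true_positive_rate(ex) for g, ex in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates

# Hypothetical predictions per group: (true_label, predicted_label)
predictions = {
    "A": [(1, 1)] * 40 + [(1, 0)] * 10 + [(0, 0)] * 50,
    "B": [(1, 1)] * 25 + [(1, 0)] * 25 + [(0, 0)] * 50,
}

gap, tpr = equal_opportunity_gap(predictions)
print(tpr, gap)
```

A gap near zero means qualified members of each group are recognized at similar rates; which metric is appropriate depends on the application, and different fairness metrics can conflict with one another.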

B. Explainable AI (XAI)

Explainable AI, or XAI, focuses on creating machine learning models that can provide transparent explanations for their decisions. By enhancing the interpretability of algorithms, XAI enables users to understand how and why a model reaches a specific conclusion. This helps identify and rectify biases, fostering accountability and trust in AI systems.
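One widely used model-agnostic explanation technique is permutation importance: shuffle one input feature and measure how much accuracy drops. The sketch below is a simplified pure-Python version under stated assumptions; the `model` function is a hypothetical stand-in for a trained model, and the data is synthetic.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, feature, repeats=20, seed=0):
    """Average drop in accuracy when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with the shuffled value in place of the original.
        shuffled = [r[:feature] + (v,) + r[feature + 1:] for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / repeats

# Hypothetical stand-in for a trained model: it only looks at feature 0.
def model(row):
    return row[0] >= 5

rows = [(x, z) for x in range(10) for z in range(2)]
labels = [x >= 5 for x, _ in rows]

imp0 = permutation_importance(model, rows, labels, feature=0)
imp1 = permutation_importance(model, rows, labels, feature=1)
print(imp0, imp1)
```

If a feature that encodes or closely tracks a protected attribute shows high importance, that is a signal worth investigating, though importance scores alone do not prove that a model is biased.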

Conclusion

As machine learning continues to permeate various aspects of our lives, understanding and addressing biases in these systems becomes paramount. The challenges associated with biases in machine learning require collaborative efforts from researchers, developers, policymakers, and society at large. By prioritizing ethical considerations, promoting transparency, and embracing inclusive practices, we can work towards creating a future where AI systems are fair, equitable, and responsible.