The Evolution of Machine Learning Algorithms: From Perceptrons to Neural Networks

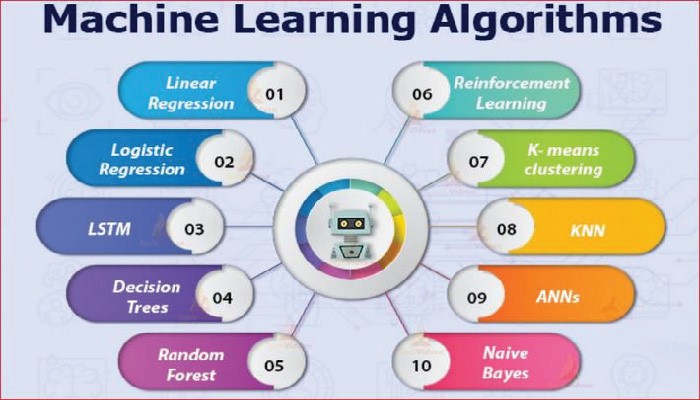

Machine learning algorithms have undergone a remarkable evolution over the past few decades, transforming from basic models to sophisticated, complex systems. This evolution has been driven by advancements in technology, increased computational power, and a deeper understanding of the underlying principles of artificial intelligence. In this article, we will delve into the journey of machine learning algorithms, exploring their origins, major milestones, and the emergence of cutting-edge techniques that define the current landscape.

1. The Birth of Machine Learning: Perceptrons and Rule-Based Systems

The roots of machine learning can be traced back to the 1950s, with the development of perceptrons and rule-based systems. The perceptron, introduced by Frank Rosenblatt in 1957, marked a significant milestone as one of the earliest attempts to create a machine that could learn from experience. However, perceptrons had a key limitation: a single-layer perceptron can only learn to classify linearly separable data.
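To make the linear-separability limitation concrete, here is a minimal sketch of the perceptron learning rule in plain Python. The function names, learning rate, and epoch count are illustrative assumptions, not taken from Rosenblatt's original formulation. The AND function is linearly separable and is learned correctly, while XOR is not and can never be fit by a single perceptron.

```python
def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Perceptron learning rule for binary labels in {0, 1}."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            prediction = 1 if activation > 0 else 0
            error = target - prediction  # 0 when correct, +1 or -1 when wrong
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, x):
    """Classify x by which side of the learned line it falls on."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


points = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_labels = [0, 0, 0, 1]  # linearly separable
xor_labels = [0, 1, 1, 0]  # not linearly separable

w_and, b_and = train_perceptron(points, and_labels)
and_predictions = [predict(w_and, b_and, p) for p in points]

w_xor, b_xor = train_perceptron(points, xor_labels)
xor_predictions = [predict(w_xor, b_xor, p) for p in points]

print(and_predictions == and_labels)  # AND is learned exactly
print(xor_predictions == xor_labels)  # XOR cannot be matched by any single line
```

No choice of weights and bias can separate the XOR labels, so the second comparison fails however long training runs.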

Rule-based systems, on the other hand, were built on predefined rules and expert knowledge. These systems were effective in certain domains but lacked the adaptability required for more complex tasks. Despite their limitations, these early models laid the foundation for future advancements in machine learning.

2. Rise of Decision Trees and Random Forests

In the 1980s and 1990s, decision trees emerged as a popular approach in machine learning. Decision trees allowed for more complex decision-making by recursively splitting data into subsets based on specific criteria. However, individual trees were prone to overfitting and often generalized poorly to unseen data.
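As a rough illustration of what "splitting data based on specific criteria" means, the sketch below picks the threshold on a single numeric feature that minimizes weighted Gini impurity, one common splitting criterion. The toy data (`ages`, `bought`) and the function names are made up for this example; a full tree would apply the same search recursively to each resulting subset.

```python
def gini(labels):
    """Gini impurity: 0.0 for a pure group, higher for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Return (threshold, weighted impurity) for the best 'value <= threshold' split."""
    best_threshold, best_score = None, float("inf")
    n = len(values)
    # The largest value is skipped because it would leave the right side empty.
    for threshold in sorted(set(values))[:-1]:
        left = [y for v, y in zip(values, labels) if v <= threshold]
        right = [y for v, y in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best_score:
            best_threshold, best_score = threshold, score
    return best_threshold, best_score


# Hypothetical toy data: customer age and whether they bought a product
ages = [22, 25, 30, 35, 40, 52]
bought = ["no", "no", "no", "yes", "yes", "yes"]

threshold, impurity = best_split(ages, bought)
print(threshold, impurity)  # 30 0.0
```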

To address these issues, the concept of ensemble learning gained prominence, leading to the development of random forests, which Leo Breiman formalized in 2001. Random forests train many decision trees on random resamples of the data and combine their predictions, which keeps the strengths of the individual trees while averaging out their tendency to overfit. This period marked a shift towards more sophisticated algorithms capable of handling diverse and complex datasets.
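The two ingredients of this kind of ensemble, resampling the training data and voting across trees, can be sketched in a few lines. This is a simplified illustration rather than a full random forest: the three one-split "stumps" are hypothetical stand-ins for trained trees, the toy rows are invented, and the random feature subsetting that real random forests also perform is left out.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement (the bagging step)."""
    return [rng.choice(rows) for _ in rows]


def majority_vote(predictions):
    """Return the class predicted by the most trees."""
    return Counter(predictions).most_common(1)[0][0]


# Hypothetical stand-ins for trees trained on different bootstrap samples.
# Each one has learned a slightly different age threshold.
stumps = [
    lambda age: "yes" if age > 28 else "no",
    lambda age: "yes" if age > 33 else "no",
    lambda age: "yes" if age > 45 else "no",
]


def forest_predict(age):
    """Ask every stump for a prediction and return the majority class."""
    return majority_vote([stump(age) for stump in stumps])


rng = random.Random(0)  # seeded so the resample is reproducible
rows = [(22, "no"), (25, "no"), (30, "no"), (35, "yes"), (40, "yes"), (52, "yes")]
sample = bootstrap_sample(rows, rng)  # training set for one tree

print(forest_predict(40))  # yes (two of the three stumps vote "yes")
print(forest_predict(30))  # no  (two of the three stumps vote "no")
```

Each stump is wrong somewhere on its own, but the vote cancels out errors that the individual models do not share.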

3. Support Vector Machines and Kernel Methods

The 1990s saw the rise of support vector machines (SVMs), a powerful algorithm for classification and regression tasks. SVMs aim to find the hyperplane that best separates data into distinct classes by maximizing the margin between them. Kernel methods extend SVMs to handle nonlinear relationships within the data, allowing for more flexibility in capturing complex patterns.
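The idea behind kernels can be checked numerically. The sketch below, using illustrative function names of my own choosing, shows that a degree-2 polynomial kernel computed directly on 2-D inputs gives the same value as an ordinary dot product in an explicit 3-D feature space. This is why an SVM can fit a nonlinear boundary without ever constructing the expanded features. An RBF kernel is included for comparison; its `gamma` value is an arbitrary assumption.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def poly2_kernel(x, z):
    """Degree-2 polynomial kernel k(x, z) = (x . z)^2."""
    return dot(x, z) ** 2


def poly2_features(x):
    """Explicit feature map for 2-D inputs that matches poly2_kernel."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def rbf_kernel(x, z, gamma=0.5):
    """Gaussian (RBF) kernel: similarity decays with squared distance."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)


x, z = (1.0, 2.0), (3.0, 4.0)
implicit = poly2_kernel(x, z)                          # computed in 2-D
explicit = dot(poly2_features(x), poly2_features(z))   # computed in 3-D

print(implicit)                              # 121.0
print(math.isclose(implicit, explicit))      # True
```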

SVMs became popular in various fields, including image recognition and bioinformatics, showcasing their effectiveness in diverse applications. The introduction of kernel methods added another layer of versatility, paving the way for more sophisticated and nuanced learning.

4. Neural Networks: The Resurgence

While neural networks had been proposed in the 1940s, they fell out of favor due to limitations in computational power and data availability. However, the 21st century witnessed a resurgence of interest in neural networks, fueled by increased computing capabilities and the availability of vast amounts of data.

The development of deep learning techniques, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), revolutionized the field. CNNs excelled in image and pattern recognition, while RNNs proved effective in handling sequential data, such as text and speech. The combination of deep learning and massive datasets led to breakthroughs in areas like speech recognition, image classification, and language translation.
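The core operation inside a CNN layer is sliding a small filter over an image. The following sketch implements that operation in plain Python with no padding and a stride of 1 (as in most deep learning libraries, it is technically a cross-correlation). The tiny image and the hand-written edge filter are assumptions for illustration; in a real CNN the filter values are learned from data.

```python
def conv2d_valid(image, kernel):
    """Slide kernel over image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy 3x4 "image": dark on the left, bright on the right
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written filter that responds to a dark-to-bright horizontal step
edge_kernel = [[-1, 1]]

feature_map = conv2d_valid(image, edge_kernel)
print(feature_map)  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The feature map is nonzero only in the column where the brightness changes, which is the sense in which a filter "detects" a pattern.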

5. Unsupervised Learning: Clustering and Dimensionality Reduction

As machine learning evolved, there was a growing emphasis on unsupervised learning techniques, where algorithms learn from unlabeled data. Clustering algorithms, such as K-means and hierarchical clustering, emerged to group similar data points together. These methods found applications in customer segmentation, anomaly detection, and pattern recognition.
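For a sense of how K-means groups points, here is a minimal sketch of its two alternating steps: assign each point to its nearest centroid, then move each centroid to the mean of its assigned points. The starting centroids are fixed by hand so the result is reproducible, which is an assumption of this example; practical implementations usually choose them randomly or with a scheme such as k-means++.

```python
def kmeans(points, centroids, iterations=10):
    """Alternate nearest-centroid assignment and centroid update."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid's cluster
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [
                sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids
            ]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its cluster
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
            if cluster
            else c  # keep the old centroid if no point was assigned to it
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters


# Toy data with two visibly separate groups
points = [
    (1.0, 1.0), (1.0, 2.0), (2.0, 1.0),
    (8.0, 8.0), (9.0, 8.0), (8.0, 9.0),
]
centroids, clusters = kmeans(points, centroids=[(0.0, 0.0), (10.0, 10.0)])

print(clusters[0])  # [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0)]
print(clusters[1])  # [(8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]
```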

Dimensionality reduction techniques, like principal component analysis (PCA) and t-distributed stochastic neighbor embedding (t-SNE), became essential for simplifying complex datasets. These approaches contributed to improving model efficiency, interpretability, and generalization.
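To illustrate what PCA computes, the sketch below finds the first principal component (the direction of greatest variance) of a small dataset using power iteration on the covariance matrix. This is one simple way to get the leading component and is an assumption of this example; libraries typically use a singular value decomposition instead. It also assumes the data is not constant and that the starting vector is not orthogonal to the answer.

```python
import math


def first_principal_component(rows, iterations=100):
    """Estimate the top principal direction via power iteration."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix of the centered data
    cov = [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]
    v = [1.0] * d  # arbitrary starting direction
    for _ in range(iterations):
        v = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
    return v, means


# Toy 2-D data lying exactly on the line y = 2x
rows = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
direction, means = first_principal_component(rows)

# Project each 2-D point onto the component to get a 1-D representation
scores = [
    sum((r[j] - means[j]) * direction[j] for j in range(2)) for r in rows
]
print(direction)  # approximately (1, 2) scaled to unit length
```

Because the points lie on a single line, one coordinate per point (`scores`) is enough to describe them, which is the simplification dimensionality reduction aims for.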

6. Reinforcement Learning and Self-Learning Systems

In recent years, reinforcement learning has gained prominence as a powerful paradigm for training models to make sequential decisions. This approach has been particularly successful in training machines for tasks like game playing, robotic control, and autonomous systems.
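A small example helps show what "learning to make sequential decisions" looks like in practice. The sketch below runs tabular Q-learning, one classic reinforcement learning algorithm, on a made-up five-cell corridor where the agent is rewarded only for reaching the rightmost cell. The environment, the hyperparameters (`alpha`, `gamma`, the episode count), and the purely random exploration are all assumptions chosen to keep the example short.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # action index 0 = step left, 1 = step right


def step(state, action_index):
    """Move within the corridor; reward 1.0 only on reaching the goal."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, max_steps=100, seed=0):
    """Learn action values from experience with the Q-learning update."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = rng.randrange(2)  # explore by acting randomly
            next_state, reward, done = step(state, action)
            # Target: immediate reward plus discounted best future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = q_learning()
# Greedy policy for the non-goal cells: index of the higher-valued action
greedy_policy = [row.index(max(row)) for row in q_table[:-1]]
print(greedy_policy)
```

Even though the agent only ever acts randomly during training, the learned values should favor stepping right in every cell, because Q-learning estimates the value of the best action rather than of the behavior it happened to follow.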

Self-learning systems, influenced by unsupervised learning, are designed to continuously improve without explicit human intervention. These systems leverage techniques such as generative adversarial networks (GANs) to create synthetic data for training, enabling models to adapt to changing environments and unforeseen challenges.

7. Explainable AI and Ethical Considerations

With the increasing complexity of machine learning models, there is a growing need for transparency and interpretability. Explainable AI (XAI) aims to enhance the understanding of model predictions, making them more accessible and trustworthy. This development is crucial for applications in healthcare, finance, and other domains where the interpretability of results is paramount.

Ethical considerations surrounding machine learning have also gained prominence, emphasizing the importance of fairness, accountability, and transparency. The evolution of algorithms must be accompanied by a commitment to addressing biases, ensuring responsible AI development, and protecting user privacy.

Conclusion:

The evolution of machine learning algorithms has been a fascinating journey, marked by continuous innovation and breakthroughs. From the early days of perceptrons and rule-based systems to the current era of deep learning and self-learning systems, the field has experienced unprecedented growth. As we look ahead, the challenges of interpretability, fairness, and ethical considerations will shape the future of machine learning, pushing researchers and practitioners to develop more robust and responsible AI systems. The journey is far from over, and the next chapter promises even more exciting developments in the world of machine learning.