Mastering the Future: A Deep Dive into Reinforcement Learning in Artificial Intelligence

Artificial Intelligence (AI) has evolved dramatically over the years, and one of the most promising branches within this field is reinforcement learning (RL). Reinforcement learning enables machines to learn and make decisions through interactions with their environment. In this article, we will explore the foundations, mechanisms, applications, and future prospects of reinforcement learning.

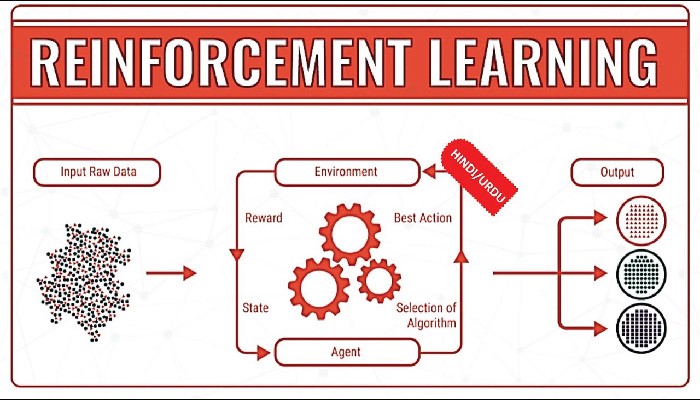

Understanding Reinforcement Learning

Reinforcement learning is a type of machine learning where an agent learns to make decisions by interacting with an environment. The agent receives feedback in the form of rewards or penalties based on its actions, allowing it to learn and optimize its decision-making process over time. Unlike supervised learning, where the algorithm is trained on labeled data, reinforcement learning involves learning from trial and error.

Key Components of Reinforcement Learning

Agent:

At the core of reinforcement learning is the agent, which is the entity responsible for making decisions and taking actions within a given environment.

Environment:

The environment is the external system the agent interacts with. It responds to each action by moving to a new state and providing feedback to the agent. Environments in reinforcement learning can range from simple grid worlds to complex simulations and real-world scenarios.

State:

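A state is a representation of the environment’s current situation as seen by the agent. The agent’s decision-making process is influenced by the state it is in, and the goal is to learn a policy that maps states to actions so as to maximize the expected cumulative reward.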

Action:

Actions are the decisions or moves made by the agent in response to the current state. The set of possible actions is defined by the environment, and the agent’s task is to learn the optimal actions for each state.

Reward:

Rewards serve as the feedback mechanism in reinforcement learning. The agent receives positive or negative rewards based on its actions, guiding it towards learning behaviors that lead to desirable outcomes. The cumulative reward over time is a crucial factor in evaluating the success of the learning process.
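The components above fit together in a simple interaction loop. The following is a minimal sketch of that loop, not a reference implementation: the `Corridor` environment, its reward values, the `always_right` policy, and the discount factor `gamma` are all hypothetical choices made for illustration and do not come from any particular library.

```python
class Corridor:
    """Hypothetical toy environment: positions 0..4, with the goal at position 4."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position  # the state is simply the agent's position

    def step(self, action):
        # action: 0 = move left, 1 = move right (movement is clipped at the walls)
        move = 1 if action == 1 else -1
        self.position = min(max(self.position + move, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1  # small penalty per step, bonus at the goal
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Let the agent (a policy function) act until the episode ends; return the rewards."""
    state = env.reset()
    rewards = []
    for _ in range(max_steps):
        action = policy(state)                   # agent picks an action for this state
        state, reward, done = env.step(action)   # environment responds with feedback
        rewards.append(reward)
        if done:
            break
    return rewards


def discounted_return(rewards, gamma=0.9):
    """Cumulative reward, with the reward at step t weighted by gamma ** t."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def always_right(state):
    """A fixed policy that ignores the state and always moves right."""
    return 1
```

With these assumptions, `run_episode(Corridor(), always_right)` collects three step penalties followed by the goal reward, and `discounted_return` of that list comes to approximately 0.458. A learning agent would replace `always_right` with a policy that improves as rewards come in.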

Reinforcement Learning Algorithms

Several reinforcement learning algorithms have been developed to train agents effectively. Two prominent classes of algorithms are:

Value-Based Methods:

Value-based methods aim to find the optimal value function, which gives the expected cumulative reward for each state or state-action pair. Q-learning and Deep Q-Network (DQN) are popular examples that use value functions to guide the agent’s decision-making process.
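To make the idea of a value function concrete, here is a minimal sketch of tabular Q-learning. It assumes a hypothetical five-state corridor in which the agent starts at state 0 and earns a reward of 1 on reaching the last state; the hyperparameters (`alpha`, `gamma`, `epsilon`) are illustrative defaults, not recommended settings.

```python
import random


def train_q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical corridor; returns the Q-table."""
    rng = random.Random(seed)
    # q[state][action] estimates the cumulative reward; actions: 0 = left, 1 = right
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on episode length
            if rng.random() < epsilon:
                action = rng.randrange(2)  # occasional random action
            else:
                best = max(q[state])
                # greedy action, breaking ties at random
                action = rng.choice([a for a in range(2) if q[state][a] == best])
            next_state = min(max(state + (1 if action == 1 else -1), 0), goal)
            done = next_state == goal
            reward = 1.0 if done else 0.0
            # Q-learning target: reward plus discounted best value of the next state
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q
```

After training, picking the action with the larger Q-value in each state yields the policy "always move right", which is the shortest path to the goal in this toy setup. DQN follows the same update idea but replaces the table with a neural network.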

Policy-Based Methods:

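Policy-based methods learn the policy directly, as a mapping from states to actions, rather than deriving it from a value function. Policy gradient methods such as Proximal Policy Optimization (PPO) are widely used in this category and offer flexibility in handling continuous action spaces. In practice, many of them (PPO included) still learn a value function alongside the policy, using it as a baseline to reduce the variance of the updates.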
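As a rough illustration of a policy gradient update, the sketch below trains a softmax policy on a hypothetical two-armed bandit, where each arm pays a reward of 1 with a fixed probability. The reward probabilities, learning rate, and running-average baseline are assumptions chosen for the example; this is a REINFORCE-style update in its simplest form, not PPO.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy_gradient(reward_probs=(0.2, 0.8), steps=2000, lr=0.1, seed=0):
    """REINFORCE-style training on a hypothetical bandit; returns action probabilities."""
    rng = random.Random(seed)
    prefs = [0.0] * len(reward_probs)  # policy parameters (one preference per action)
    baseline = 0.0  # running average of rewards, used to reduce variance
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        advantage = reward - baseline
        # For a softmax policy, d log pi(action) / d prefs[a] = 1[a == action] - probs[a]
        for a in range(len(prefs)):
            grad_log = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * advantage * grad_log
        baseline += (reward - baseline) / t
    return softmax(prefs)
```

Calling `train_policy_gradient()` returns the learned action probabilities, which should favor the second arm since it pays out more often. Note that the policy parameters are adjusted directly; no table of state or action values is consulted when choosing an action.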

Applications of Reinforcement Learning

Game Playing:

Reinforcement learning has gained significant attention in the domain of game playing. Notable examples include DeepMind’s AlphaGo, which defeated world champion Go players, and OpenAI Five, OpenAI’s Dota 2 system; both showcase the ability of RL algorithms to master complex strategies.

Robotics:

In robotics, reinforcement learning enables robots to learn tasks through physical interactions with their environment. This includes tasks such as grasping objects, navigating environments, and even more sophisticated actions like cooking.

Autonomous Vehicles:

Reinforcement learning is an active area of work in the development of autonomous vehicles. Agents can learn driving policies by interacting with simulated environments, where they can practice navigating complex traffic scenarios without real-world risk.

Finance and Trading:

The financial sector benefits from reinforcement learning in algorithmic trading, portfolio optimization, and risk management. RL algorithms can adapt to changing market conditions and optimize trading strategies over time.

Challenges and Future Directions

Sample Efficiency:

One significant challenge in reinforcement learning is the need for a large number of interactions with the environment to achieve optimal performance. Enhancing sample efficiency is a crucial area of research to make RL algorithms more practical for real-world applications.

Generalization:

RL algorithms often struggle with generalizing knowledge from one environment to another. Developing methods that enable agents to transfer learned knowledge across diverse scenarios remains an active area of exploration.

Exploration-Exploitation Tradeoff:

Striking the right balance between exploration and exploitation is essential for effective learning. Research is ongoing to devise methods that enable agents to explore efficiently while still exploiting known information to maximize cumulative rewards.
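One of the simplest ways to manage this tradeoff is an epsilon-greedy rule: with a small probability the agent tries a random action, and otherwise it picks the action it currently believes is best. The sketch below applies this to a hypothetical multi-armed bandit with Gaussian rewards; the arm means, noise level, and `epsilon` value are assumptions made for illustration.

```python
import random


def epsilon_greedy_bandit(true_means, epsilon=0.1, steps=5000, seed=0):
    """Run epsilon-greedy on a hypothetical bandit; returns (estimates, pull counts)."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # running average reward per arm
    counts = [0] * n_arms       # number of times each arm was pulled
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: pick any arm at random
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = rng.gauss(true_means[arm], 1.0)  # noisy reward around the true mean
        counts[arm] += 1
        # incremental update of the sample mean for the chosen arm
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts
```

For example, `epsilon_greedy_bandit([0.0, 0.5, 2.0])` should end up pulling the third arm far more often than the others, while the occasional random pulls keep the estimates for the weaker arms from going stale. Setting `epsilon` to 0 removes exploration entirely, and the agent may then lock onto whichever arm happened to look good first.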

Real-World Robustness:

Deploying RL models in the real world poses challenges related to safety and robustness. Ensuring that agents make reliable decisions in dynamic and unpredictable environments is a key focus for researchers and practitioners.

Conclusion

Reinforcement learning represents a groundbreaking approach to artificial intelligence, enabling machines to learn and adapt through interaction with their surroundings. As the field continues to evolve, addressing challenges related to sample efficiency, generalization, and real-world robustness will be crucial for widespread adoption. The applications of reinforcement learning span various domains, from gaming and robotics to finance and autonomous vehicles, showcasing its versatility and potential impact on shaping the future of AI. As researchers delve deeper into these challenges and push the boundaries of what is possible, the era of intelligent, adaptive machines seems closer than ever.